It’s on the tip of everyone’s tongue these days. The edge. But what, exactly, is the edge? Is it the edge of the operator’s network? The edge of the last mile? The home? The device? All of the above? Everyone, or every industry, seems to have their own definition, which has spawned a number of other terms, mist and fog to name a few. The issue with all this uncertainty, though, is that it can create confusion and undermine the strategy and architectural design within the streaming technology workflow. This can have far-reaching impacts on scale, resiliency, and even the bottom line.

In this post, we’ll explore what the edge really is and why talking about it as some magical location that improves every aspect of the streaming workflow actually hampers innovation.

So What Is “The Edge?”

The edge is, simply, the closest point on the network to the requesting device. Of course, that changes for individual use cases. For autonomous cars, that closest point might be the cellular tower. For streaming video caching, that might be the device itself. But the location of network resources, whether they are deep in the network or first in line to deal with requests, really doesn’t mean anything besides a shorter round-trip time. The exception to this is when they aren’t discrete services, like a caching box, but more an extension of the cloud itself, such as a fabric of memory, GPU, and CPU, all working together.

The Real Power of the Cloud (Edge)

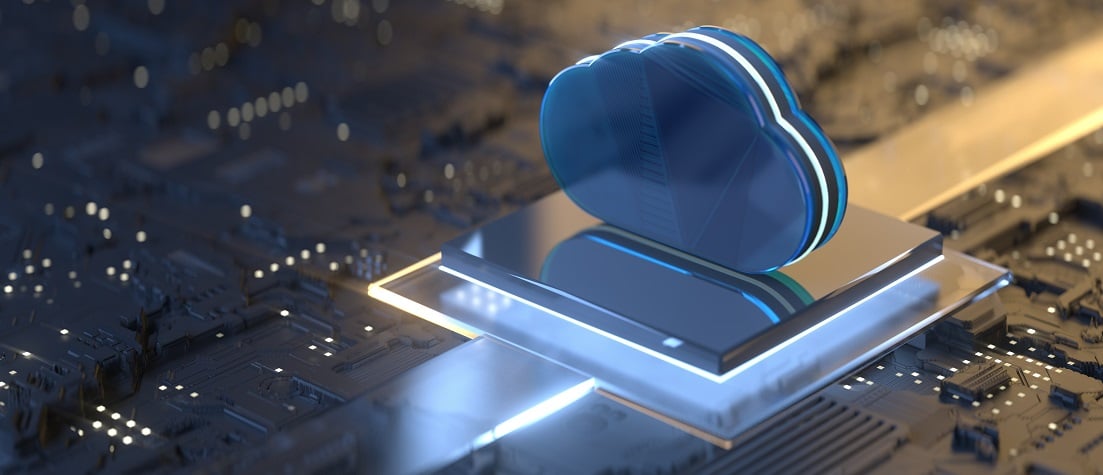

When you stop seeing the edge as a location and start seeing it as an aspect of cloud computing, its value becomes more apparent. When the cloud was first established, it was all about virtual instances, a way to scale up servers without having to install new boxes. But as the cloud matured, more focus was placed on distribution than on instances. Containers became the new norm as they could be spread across more servers in more places.

Today, as organizations are embracing Kubernetes and Docker as integral technologies within streaming technology stacks, the cloud is evolving even more. Just as servers were distilled into containers (with just the bare minimum operating system components to support a specific application), containers are being distilled into functions. What were once massive clusters of servers in a datacenter have effectively become compute, storage, and memory resources spread across a giant footprint of physical machines. And that’s the real power of the cloud, finally realized: a fabric of computing resources. But the question is, “does every streaming video technology belong there?”

Location Does Matter. Sometimes.

The fabric of the cloud has been pushing outward ever since the beginning. From centralized datacenters to cellular towers to home networking equipment (like a router) to the very device itself, the distributed resources of the cloud have always moved closer to the end user. And for some components within the streaming video technology stack, closer is definitely better. For example, shorter round-trip times between the requesting device and the cache with the content spell a better user experience (especially for live streams). Another example is scale. Those same caching servers are usually standard HTTP servers, responsible for responding to user requests for content. As the concurrency of users grows, the ability to scale easily within the fabric can mean the difference between stalls and HD.

What Do You Really Gain From the Edge?

Trying to architect the entire stack into this compute fabric doesn’t make much sense. Not because it can’t be done but because the value isn’t worth the effort. If it takes 500 hours to re-architect a component (at a cost of tens of thousands of dollars) but the gain is just a few milliseconds of latency, then it’s probably not worth it. Understanding the gain of optimization, whether it’s to the bottom line or to user satisfaction, is a critical element of architectural planning within the video technology stack. Yet all this talk about “the edge” has everyone looking at how the entire workflow can be moved from instances to containers to serverless functions.
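To make that trade-off concrete, here is a minimal sketch of the arithmetic. The 500 hours come from the example above; the $100 hourly rate and the 5 ms latency gain are assumptions chosen purely for illustration, and the function name is hypothetical.

```python
def cost_per_ms_saved(hours: float, hourly_rate: float, latency_gain_ms: float) -> float:
    """Engineering cost in dollars for each millisecond of latency removed."""
    if latency_gain_ms <= 0:
        raise ValueError("latency_gain_ms must be positive")
    return (hours * hourly_rate) / latency_gain_ms


# 500 hours is from the text; the hourly rate and the gain are assumed values.
dollars_per_ms = cost_per_ms_saved(hours=500, hourly_rate=100.0, latency_gain_ms=5.0)
print(f"${dollars_per_ms:,.0f} per millisecond saved")
```

Under those assumed numbers, each millisecond costs $10,000 of engineering time, which is the kind of figure worth putting in front of the team before committing to the work.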

The Critical Consideration

Deciding to spend valuable engineering resources on transitioning workflow components to the edge isn’t just about the cost of the effort versus the gain. There’s another core question that needs to be answered: “can the new edge service be monitored effectively?”

End-to-end monitoring of the streaming workflow is, without question, an integral part of a successful streaming service. It is the analog to Quality-of-Service monitoring in traditional broadcast: streaming operations engineers must have visibility into every component of the workflow to be able to ferret out the root cause of performance or quality issues. But monitoring a serverless function is very different from monitoring a virtualized instance or even a containerized technology. As such, streaming platform operators must take into account their ability to gather data from any component they tap for migration into that compute fabric and deployment “at the edge.”

Bright and Shiny Doesn’t Save The Day

It’s probably a foregone conclusion that developers, being who they are, will test new technologies and newer ways to deploy them. Discoveries of how to deploy streaming components as serverless functions will influence architectural decisions and strategies for months and years to come. But failing to take into consideration the answers to critical questions like “is it worth the effort” and “can we monitor it” will result in wasted resources and, ultimately, a less stable streaming service. That’s why we have to stop talking about the edge. Until we take that word out of the conversation, it will continue to be a beacon. The idea of making a technology more distributed, more elastic, more flexible and scalable is great. The idea of moving a technology to a specific place in the network and expecting gains just because the technology is located in that place isn’t.

To truly take advantage of network location for workflow components, you need a strategy. Put all of your workflow components on a spreadsheet. Assess the value of converting them into microservices or serverless functions deployable closer to the end user. Then calculate the cost of doing so. Finally, work with operations engineers to understand the monitoring implications. This is your strategy: a list of components which make the most sense to convert and deploy closer to the user.
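The spreadsheet exercise above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Component` fields, the 0-10 value score, the hour estimates, and the component names are all hypothetical, and ranking by value per hour is just one plausible way to weigh value against cost.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    value: float       # assumed 0-10 score for the benefit of moving closer to the user
    cost: float        # assumed migration cost in engineering hours
    monitorable: bool  # operations confirmed it can still be monitored after the move


def migration_candidates(components):
    """Return monitorable components sorted by value per hour, best first."""
    eligible = [c for c in components if c.monitorable and c.cost > 0]
    return sorted(eligible, key=lambda c: c.value / c.cost, reverse=True)


# Hypothetical workflow rows; replace with your own spreadsheet data.
workflow = [
    Component("cache", value=9, cost=120, monitorable=True),
    Component("manifest-manipulation", value=6, cost=200, monitorable=True),
    Component("transcoder", value=3, cost=500, monitorable=True),
    Component("drm-license", value=7, cost=150, monitorable=False),
]

for c in migration_candidates(workflow):
    print(f"{c.name}: {c.value / c.cost:.3f} value per hour")
```

Treating monitoring as a hard filter rather than one more score reflects the point made earlier: a component that can’t be monitored after the move drops off the list no matter how attractive the latency gain looks.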

Developing a strategy for component migration closer to the end user is just one step in becoming “cloud native.” If you want an end-to-end monitoring solution which supports cloud-based workflows, you should check out VirtualNOC.